AI and Deception Reading Group

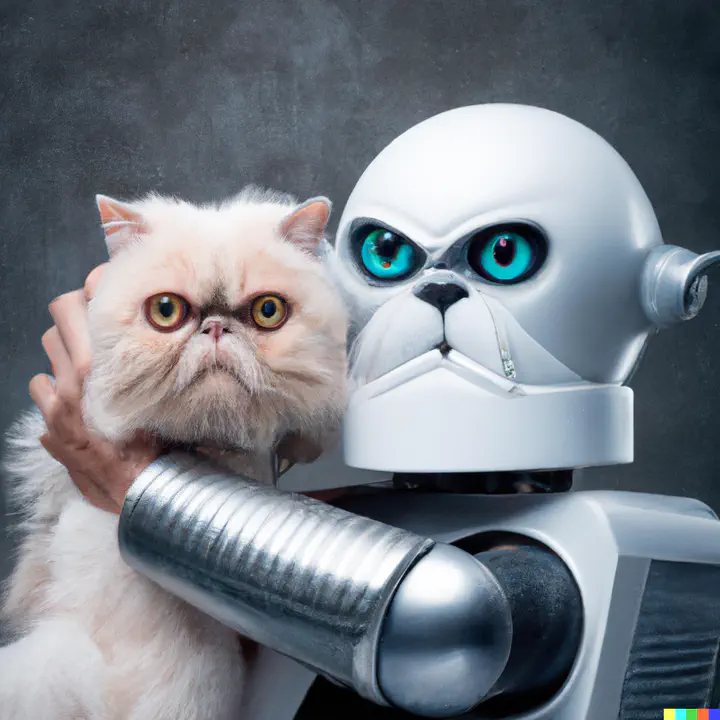

Image created with DALL·E by OpenAI (prompt: a photo of a robot that looks like dr evil holding a persian cat)

As social robots become more prevalent, some worry about the nature of the relationship between humans and social robots. For instance, humans tend to attribute complex cognitive and emotional capacities to objects, which often leads them to empathize with those objects. This tendency could be exploited to deceive humans into thinking that robots have greater cognitive and emotional capacities than they actually do. In what instances does such deception occur? Is it morally problematic? What does this mean for relationships (and friendships) between humans and, say, care robots or sex robots?

Moreover, there has recently been some work on whether robots themselves can lie to us or otherwise deceive us, or whether they can only be used by other humans to do so. What does it mean for anyone to deceive us, and can this notion be applied to robots? What requirements would chatbots, for example, have to meet for it to be possible for them to mislead or trick us?

Date and Time of first meeting: October 27 at 4 PM CEST, online.

Contact Michael Dale or Guido Löhr to join the group or receive updates.